Tutorial: building a mini ReAct agent with LangGraph

Prerequisites

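I'm working on Ubuntu 24 with LangGraph 0.2.60. This is not an introduction to LangChain or LangGraph; you are expected to have a basic understanding of both libraries. You will also need an OpenAI API key (ChatOpenAI reads it from the OPENAI_API_KEY environment variable) and the langchain-openai, langchain-community and duckduckgo-search packages installed.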

What is a "ReAct agent"?

There is no official definition of "agent", but one can think of an agent as a system that uses an LLM to reason through a problem and then act on that reasoning. For example, given a question, an agent has to decide whether it already has enough information to answer, or whether it should first call a specialized tool to gather more information. A ReAct agent (short for "Reasoning and Acting") does this in a loop: the LLM reasons about what to do next, acts by calling a tool, observes the result, and repeats until it can give a final answer.
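The reason, act, observe cycle behind a ReAct agent can be illustrated with a toy sketch in plain Python, with no LangChain involved. Both `fake_llm` and `lookup` are hypothetical stand-ins: the "LLM" is scripted to request lookups until it has both birth years, and the "tool" reads from a hard-coded table instead of calling an API.

```python
# Toy ReAct loop: both the "LLM" and the tool are hypothetical stand-ins.

def lookup(name: str) -> str:
    """Hypothetical tool: reads birth years from a hard-coded table instead of an API."""
    birth_years = {"Luke Skywalker": "19BBY", "Han Solo": "29BBY"}
    return birth_years[name]


def fake_llm(question: str, observations: dict) -> dict:
    """Hypothetical scripted 'LLM'. A real model would read the question; this one
    simply requests lookups until it has both birth years, then answers."""
    for name in ("Luke Skywalker", "Han Solo"):
        if name not in observations:
            return {"action": "lookup", "input": name}  # decide to act
    return {
        "answer": f"Luke Skywalker was born in {observations['Luke Skywalker']}, "
        f"Han Solo in {observations['Han Solo']}."
    }


TOOLS = {"lookup": lookup}


def react_loop(question: str, max_steps: int = 5) -> str:
    observations: dict = {}
    for _ in range(max_steps):
        decision = fake_llm(question, observations)  # Reason
        if "answer" in decision:
            return decision["answer"]
        tool = TOOLS[decision["action"]]
        observations[decision["input"]] = tool(decision["input"])  # Act, then observe
    return "Stopped: too many steps."


print(react_loop("How much older is Han Solo than Luke Skywalker?"))
# Luke Skywalker was born in 19BBY, Han Solo in 29BBY.
```

The `max_steps` cap is a design choice worth keeping in real agents too: without it, a model that keeps requesting tools would loop forever.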

Example mini-agent

We will build a small agent that can use one of two tools to find information about Star Wars characters. We can ask questions such as "How much older is Han Solo than Luke Skywalker?", and the agent will decide which tool to use, if any, and answer once it has enough information.

First, the imports and the chat model:

```python
import requests

from IPython.display import Image, display
from langchain_community.tools import DuckDuckGoSearchRun
from langchain_core.messages import HumanMessage, SystemMessage
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.graph import MessagesState, START, StateGraph
from langgraph.prebuilt import tools_condition, ToolNode

llm = ChatOpenAI(model="gpt-4o")
```

We will create two tools: a custom one that calls a Star Wars API (swapi.info) and a ready-made web search tool:

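```python
@tool
def get_character_info(id: int) -> dict:
    """Returns information about a specific Star Wars character

    Args:
        id: The ID in the StarWarsAPI for the character
            ids of characters:
            1: Luke Skywalker
            14: Han Solo

    Returns:
        dict with keys like: name, height, mass, hair_color, homeworld, starships (as a list)
        and others.
    """
    # The docstring above is what the LLM sees, so it doubles as the tool's instructions
    r = requests.get(f"https://swapi.info/api/people/{id}", timeout=10)
    r.raise_for_status()  # surface HTTP errors instead of trying to parse an error page
    return r.json()


# Ready-made web search tool from langchain_community
search = DuckDuckGoSearchRun()
```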

Calling the tool directly returns the raw JSON from the API. For example, for Han Solo (ID 14):

```python
get_character_info.invoke({"id": 14})
```

```
{'name': 'Han Solo',
 'height': '180',
 'mass': '80',
 'hair_color': 'brown',
 'skin_color': 'fair',
 'eye_color': 'brown',
 'birth_year': '29BBY',
 'gender': 'male',
 'homeworld': 'https://swapi.info/api/planets/22',
 'films': ['https://swapi.info/api/films/1',
           'https://swapi.info/api/films/2',
           'https://swapi.info/api/films/3'],
 'species': [],
 'vehicles': [],
 'starships': ['https://swapi.info/api/starships/10',
               'https://swapi.info/api/starships/22'],
 'created': '2014-12-10T16:49:14.582000Z',
 'edited': '2014-12-20T21:17:50.334000Z',
 'url': 'https://swapi.info/api/people/14'}
```

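Next we bind the tools to the LLM. This sends the tool definitions along with every call, so the model can decide to request a tool call whenever it is useful. Note that binding only lets the model request tool calls; it does not execute them (more on that below).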

```python
tools = [get_character_info, search]
llm_with_tools = llm.bind_tools(tools)
```

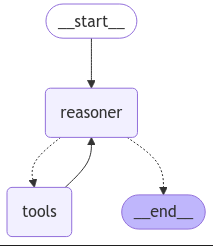

Next, we define the system prompt and build the LangGraph nodes and edges:

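```python
sys_message = SystemMessage(
    content="You are a useful assistant tasked with searching for and finding information about Star Wars characters"
)


# Node: the LLM (with tools bound) either answers or requests tool calls
def reasoner(state: MessagesState):
    # Prepend the system prompt to the conversation so far
    return {"messages": [llm_with_tools.invoke([sys_message] + state["messages"])]}


builder = StateGraph(MessagesState)
builder.add_node("reasoner", reasoner)
builder.add_node("tools", ToolNode(tools))  # executes the tool calls in the last AIMessage

builder.add_edge(START, "reasoner")
# tools_condition routes to "tools" if the last message has tool calls, otherwise to END
builder.add_conditional_edges("reasoner", tools_condition)
# After the tools have run, go back to the reasoner so it can use the results
builder.add_edge("tools", "reasoner")

react_graph = builder.compile()

# Uncomment to visualize the graph (e.g. in a Jupyter notebook):
# display(Image(react_graph.get_graph(xray=True).draw_mermaid_png()))
```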

All we need now is to run the graph:

```python
messages = [HumanMessage(content="What is the height of Luke Skywalker and how much older is he than Han Solo?")]
messages = react_graph.invoke({"messages": messages})

for m in messages["messages"]:
    m.pretty_print()
```

```
================================ Human Message =================================

What is the height of Luke Skywalker and how much older is he than Han Solo?
================================== Ai Message ==================================
Tool Calls:
  get_character_info (call_1zTTl9jvOrSeO0SUPD7OvbdR)
 Call ID: call_1zTTl9jvOrSeO0SUPD7OvbdR
  Args:
    id: 1
  get_character_info (call_hzbqd5v69htk9aBoK9wo2489)
 Call ID: call_hzbqd5v69htk9aBoK9wo2489
  Args:
    id: 14
================================= Tool Message =================================
Name: get_character_info

{"name": "Luke Skywalker", "height": "172", "mass": "77", "hair_color": "blond", "skin_color": "fair", "eye_color": "blue", "birth_year": "19BBY", "gender": "male", "homeworld": "https://swapi.info/api/planets/1", "films": ["https://swapi.info/api/films/1", "https://swapi.info/api/films/2", "https://swapi.info/api/films/3", "https://swapi.info/api/films/6"], "species": [], "vehicles": ["https://swapi.info/api/vehicles/14", "https://swapi.info/api/vehicles/30"], "starships": ["https://swapi.info/api/starships/12", "https://swapi.info/api/starships/22"], "created": "2014-12-09T13:50:51.644000Z", "edited": "2014-12-20T21:17:56.891000Z", "url": "https://swapi.info/api/people/1"}
================================= Tool Message =================================
Name: get_character_info

{"name": "Han Solo", "height": "180", "mass": "80", "hair_color": "brown", "skin_color": "fair", "eye_color": "brown", "birth_year": "29BBY", "gender": "male", "homeworld": "https://swapi.info/api/planets/22", "films": ["https://swapi.info/api/films/1", "https://swapi.info/api/films/2", "https://swapi.info/api/films/3"], "species": [], "vehicles": [], "starships": ["https://swapi.info/api/starships/10", "https://swapi.info/api/starships/22"], "created": "2014-12-10T16:49:14.582000Z", "edited": "2014-12-20T21:17:50.334000Z", "url": "https://swapi.info/api/people/14"}
================================== Ai Message ==================================

Luke Skywalker is 172 cm tall. He was born in 19BBY, while Han Solo was born in 29BBY, making Han Solo 10 years older than Luke Skywalker.
```
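The model's arithmetic is easy to double-check. BBY means "Before the Battle of Yavin", so a larger BBY number means an earlier birth. A minimal sketch, assuming SWAPI `birth_year` strings look like `19BBY`; the helper name `birth_year_to_number` and its ABY branch are illustrative additions, not part of the tutorial's agent.

```python
def birth_year_to_number(birth_year: str) -> float:
    """Convert a SWAPI birth_year such as '19BBY' to a signed year.

    BBY (Before the Battle of Yavin) becomes negative, ABY (after) positive.
    The ABY branch is an assumption added for completeness.
    """
    if birth_year.endswith("BBY"):
        return -float(birth_year[:-3])
    if birth_year.endswith("ABY"):
        return float(birth_year[:-3])
    raise ValueError(f"Unrecognised birth year: {birth_year!r}")  # e.g. 'unknown'


luke = birth_year_to_number("19BBY")  # -19.0
han = birth_year_to_number("29BBY")   # -29.0
print(f"Han Solo is {luke - han:g} years older than Luke Skywalker")
# Han Solo is 10 years older than Luke Skywalker
```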

So, what actually happened here?

Create tools

In the tools section we created two tools: a custom one and a built-in one from langchain_community.tools. Tools in LangChain are essentially functions that an LLM can ask to have called. There are different ways to create tools in LangChain; in our example we decorated a plain function with @tool. The decorator builds the tool's schema from the function's name, type hints and docstring. The docstring in particular describes what the tool does, and the language model uses it to decide whether and how the tool should be used.

Bind tools to LLM

With the bind_tools method we pass a list of tools to the LLM. This makes the model aware of the tools (their names, descriptions and argument schemas) so it can request them when appropriate. If you invoke llm_with_tools directly with a query, the LLM does not actually run the tool: it only returns the tool name and the arguments to call it with, and it is up to the user or caller to actually invoke the tool.

```python
r = llm_with_tools.invoke("Tell me about Luke Skywalker")
```

```
AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_nejexqzJTsIxoEhbJ202YS5l', 'function': {'arguments': '{"id":1}', 'name': 'get_character_info'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 16, 'prompt_tokens': 168, 'total_tokens': 184, 'completion_tokens_details': {'accepted_prediction_tokens': 0, 'audio_tokens': 0, 'reasoning_tokens': 0, 'rejected_prediction_tokens': 0}, 'prompt_tokens_details': {'audio_tokens': 0, 'cached_tokens': 0}}, 'model_name': 'gpt-4o-2024-08-06', 'system_fingerprint': 'fp_d28bcae782', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-5962e772-d42b-4838-bbde-6f307d9afb73-0', tool_calls=[{'name': 'get_character_info', 'args': {'id': 1}, 'id': 'call_nejexqzJTsIxoEhbJ202YS5l', 'type': 'tool_call'}], usage_metadata={'input_tokens': 168, 'output_tokens': 16, 'total_tokens': 184, 'input_token_details': {'audio': 0, 'cache_read': 0}, 'output_token_details': {'audio': 0, 'reasoning': 0}})
```

The tool_calls attribute of the AIMessage returned by llm_with_tools tells you which tool the model wants called and with which arguments; here it is get_character_info with {'id': 1}.